Facial recognition technology: Kmart case

Today I read the investigation report on Australia’s Kmart, a retail giant found to have used facial recognition technology (FRT) on ALL customers for 2 years without consent. What happens when noble intentions clash with a lack of proper governance?

Has it happened before?

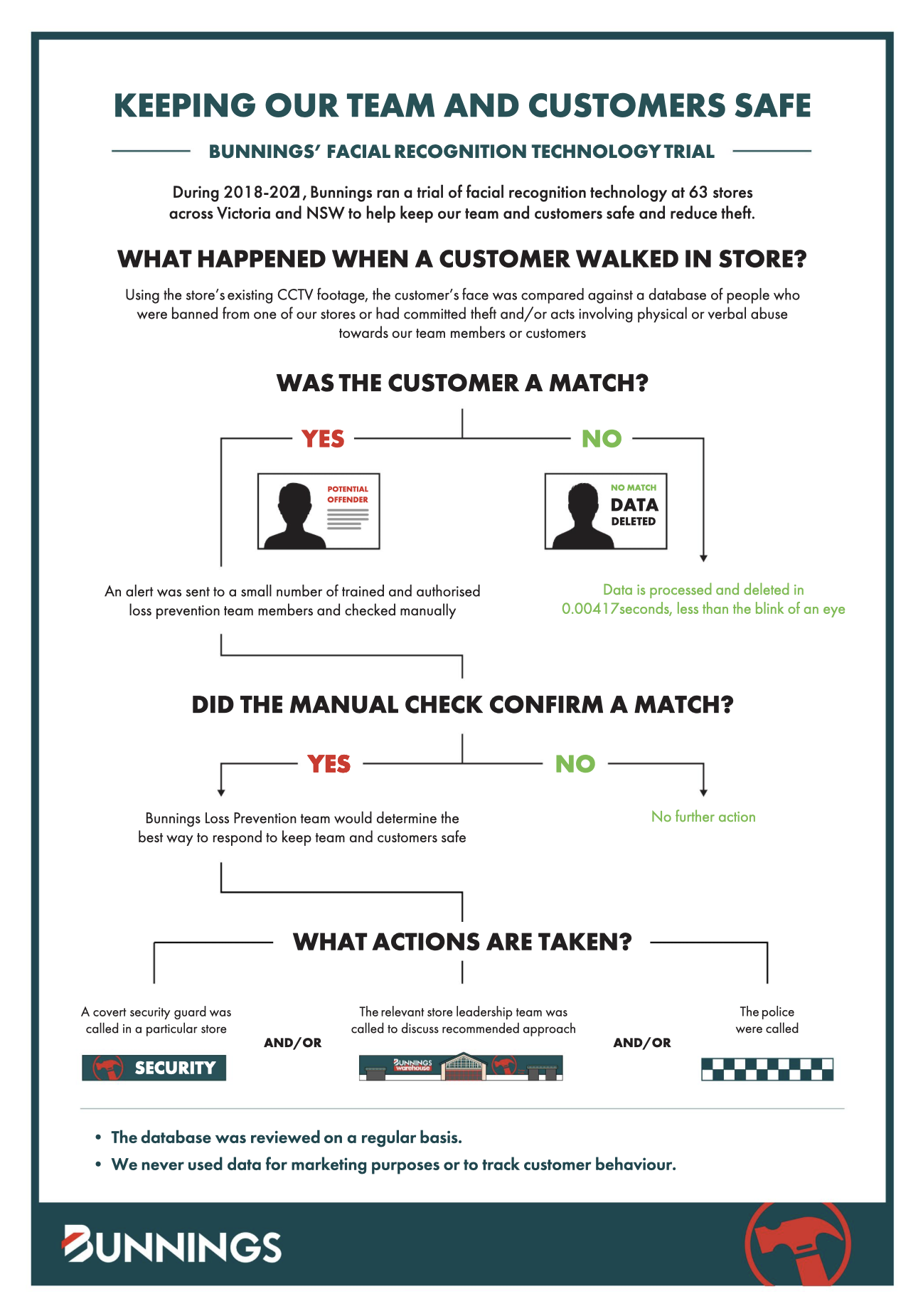

Yes! In 2024, Bunnings, a major DIY store chain, was found to have used FRT in its stores too: the same story, different reasons. For Bunnings, it was about preventing customers’ aggression towards staff. The store management was asked to publish an explanation of how exactly the technology works, which they did.

(Source: https://www.bunnings.com.au/about-us/facial-recognition-technology)

Kmart used the tech to try to fight refund fraud, things like returning stolen items or swapping barcodes. It is a serious problem: in the US alone, around 15% of returns involved some kind of fraud in 2024, costing billions.

Why did the Privacy Commissioner rule that it is not OK?

Despite the good reasons behind the decision to deploy the technology, the Privacy Commissioner ruled Kmart had violated the Privacy Act 1988 (in Australia, there is no AI-specific legislation, so the existing laws are applied). “Just because a technology may be helpful or convenient, does not mean its use is justifiable”, she said, bringing up the topic of proportionality: the benefit of using a technology must clearly and overwhelmingly outweigh the privacy risks it poses. The “faceprints” the store collected, even if not stored for more than a second, were used for matching, which turns a photo from personal information into sensitive information - a very important distinction.

The system scanned everyone who walked in, collecting biometric data from hundreds of thousands of people, not just the few bad actors. But it was not very good at its job: the amount of fraud prevented was tiny compared to the overall problem. The massive privacy risk to every customer was not outweighed by the very limited benefits.

Importantly, the company never properly explored less privacy-invasive alternatives like better staff training or stricter return policies. The Commissioner emphasized that the company did not need to find an alternative that was equally effective at preventing fraud. Instead, they just had to show they had properly considered and rejected other, less privacy-invasive methods. The company failed to do this.

Finally, the company did display an “updated” Conditions of Entry Notice at the store entrance, stating that the store had “24-hour CCTV coverage, which includes facial recognition technology”. However, many stores were using the tech for months before this notice was displayed, meaning a vast number of customers were unaware of the technology’s use when they entered. The privacy policy, which was in effect for over a year after the pilot began, did not mention facial recognition at all.

This isn’t about shaming companies. Neither Bunnings nor Kmart was fined. It’s a reminder for all organisations: transparency, necessity, and proportionality are the key considerations when deploying facial recognition systems like these.

What are your thoughts on this? Should companies have the right to use FRT to protect their business, even if it impacts the privacy of every customer?